Hi all

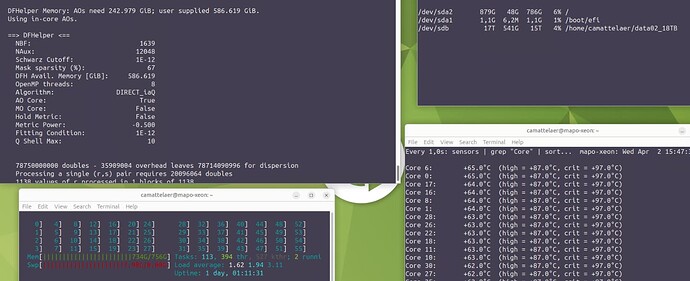

I am trying to figure out whether this behaviour is expected. I am running an F-SAPT calculation on a 285-atom system and notice that the memory it actually uses is far more than what DFhelper estimates (see screenshot).

For dispersion, DFhelper estimates that 243 GB of memory is required, which is much less than I am allowing Psi4 to allocate. Yet, as you can see in htop, memory usage climbs well past 700 GB. The job also writes 540 GB of scratch data to disk (reported by df on the right-hand side of the screenshot). Unfortunately, Psi4 eventually fills the RAM completely and crashes. Neither my Slurm output nor the .log file mentions an out-of-memory (OOM) kill, but I still suspect that this is why the process is being killed.
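To put a number on the gap between the estimate and real usage, a minimal sketch like the following could record peak memory when Psi4 is driven from Python (assuming it runs in-process, e.g. calling `peak_rss_gb()` right after `psi4.energy("fisapt0")`). The helper names `peak_rss_gb` and `overshoot` are hypothetical; only the standard-library `resource` module is used.

```python
import resource
import sys


def peak_rss_gb() -> float:
    """Peak resident set size of the current process, in decimal GB."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # Linux reports ru_maxrss in kilobytes; macOS reports it in bytes.
    peak_bytes = peak if sys.platform == "darwin" else peak * 1024
    return peak_bytes / 1e9


def overshoot(estimate_gb: float, observed_gb: float) -> float:
    """Factor by which the observed usage exceeds the estimate."""
    return observed_gb / estimate_gb


# Figures from this post: 243 GB estimated vs. more than 700 GB observed.
print(f"observed / estimated = {overshoot(243.0, 700.0):.2f}")
```

With the numbers above the observed usage is roughly three times the dispersion estimate. Note that `ru_maxrss` only reflects the calling process, so it will not see memory held by separate child processes.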

Should the memory I make available be the sum of the individual estimates for each stage (e.g. SCF, and the F-SAPT electrostatics, induction, exchange, dispersion, …)?
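The two possibilities in this question can be framed as bounds on peak memory. This is a sketch under an assumption, not a description of what Psi4 actually does: if each stage frees its buffers before the next starts, the peak is about the largest single estimate; if nothing is released, it approaches the sum. The function `memory_bounds_gb` is hypothetical, and the stage estimates would have to be read off the output file.

```python
from typing import Mapping, Tuple


def memory_bounds_gb(stage_estimates: Mapping[str, float]) -> Tuple[float, float]:
    """Return (lower, upper) bounds on peak memory from per-stage estimates.

    lower: stages run one after another and free their buffers (peak = max).
    upper: no buffers are freed between stages (peak = sum).
    Raises ValueError if no estimates are given.
    """
    values = list(stage_estimates.values())
    if not values:
        raise ValueError("need at least one stage estimate")
    return max(values), sum(values)
```

If the observed peak lies well above even the summed estimates, that would suggest some allocations are not covered by the printed estimates at all.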

Is there a way to offload more to disk rather than memory?

Kind regards and thanks for the help

Charles-Alexandre

fsapt.inp.txt (13.9 KB)

fsapt.log.txt (406 Bytes)

fsapt.out.txt (183.1 KB)